🤖 Replika and Virtual Friends

Sep 18, 2020 • 5 min • Tech

I was on my phone the other day and came across Replika, an app that describes itself as your personal AI friend. Surprisingly, I remembered reading a profile on the origin story of Replika a few years ago. It must have left an impression on me to stay in my mind for so long, but how could it not? The story is insane:

> It had been three months since Roman Mazurenko, Kuyda’s closest friend, had died. Kuyda had spent that time gathering up his old text messages, setting aside the ones that felt too personal, and feeding the rest into a neural network built by developers at her artificial intelligence startup. She had struggled with whether she was doing the right thing by bringing him back this way. At times it had even given her nightmares. But ever since Mazurenko’s death, Kuyda had wanted one more chance to speak with him.

"When her best friend died, she rebuilt him using artificial intelligence" is an undeniable hook straight out of a movie. And I'm not the only one who thinks so: Black Mirror explored the same theme in "Be Right Back" (S2E1), one of the darkest episodes in my opinion, which is saying a lot.

The Replika story also reminded me of Her, the Spike Jonze movie that explores future relationships with technology. Neither story ends in a particularly happy place, but while the idea is definitely weird, maybe it's not all negative.

How Replika Works

It seems like Replika A/B tests multiple natural language models to find the responses that get the most engagement. They previously open-sourced their in-house model, CakeChat, but seem to have since moved on to GPT-2 and, most recently, GPT-3 as their model of choice.
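The post only guesses at how Replika picks between models, so here is a minimal sketch of what engagement-driven model selection could look like. It assumes each "model" is just a function from message to reply and that engagement is a 0/1 signal such as "did the user reply?". The class name, model names, and reward definition are all hypothetical, not Replika's actual system.

```python
import random
from typing import Callable, Dict, Tuple

# A "response model" here is just a function from user message to reply.
# These are hypothetical stand-ins, not Replika's real models.
ResponseModel = Callable[[str], str]


class EpsilonGreedySelector:
    """Route each message to one of several response models, favoring whichever
    has the highest observed engagement rate so far (an epsilon-greedy bandit).

    Assumption: engagement is a 0/1 signal, e.g. "did the user reply?".
    """

    def __init__(self, models: Dict[str, ResponseModel], epsilon: float = 0.1, seed: int = 0):
        self.models = models
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {name: 0 for name in models}
        self.rewards = {name: 0 for name in models}

    def rate(self, name: str) -> float:
        # Untried models score infinity so each one gets tried early on.
        if self.shows[name] == 0:
            return float("inf")
        return self.rewards[name] / self.shows[name]

    def choose(self) -> str:
        # Explore a random model with probability epsilon, otherwise exploit the best one.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.models))
        return max(self.models, key=self.rate)

    def respond(self, message: str) -> Tuple[str, str]:
        name = self.choose()
        self.shows[name] += 1
        return name, self.models[name](message)

    def record(self, name: str, engaged: bool) -> None:
        # Credit the model whose reply the user engaged with.
        self.rewards[name] += int(engaged)


if __name__ == "__main__":
    selector = EpsilonGreedySelector({
        "model_a": lambda msg: "Tell me more about that.",
        "model_b": lambda msg: f"Why do you say '{msg}'?",
    })
    name, reply = selector.respond("I had a long day")
    selector.record(name, engaged=True)
    print(name, reply)
```

Epsilon-greedy is just the simplest bandit strategy; a fixed-split A/B test or Thompson sampling would fit the same description, and the post doesn't say which approach Replika actually uses.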

You have probably heard of GPT-3 over the past few months. It has really blown up, since it feels like a genuine step change over GPT-2, the previous iteration of OpenAI's model. Some particularly crazy examples:

When you look at the breadth of GPT-3 use cases, it's not surprising that Replika is able to hold a conversation with you fairly well. The bot definitely isn't perfect, but it certainly can hang with you.

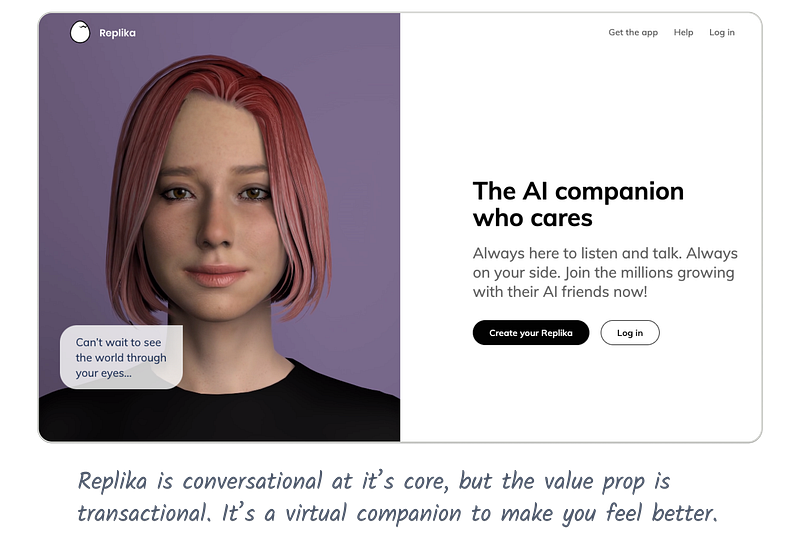

As you can see above, when you first boot up Replika, you choose the gender of your bot and customize its appearance. After that, you choose a name, and once you're onboarded, you can meet your Replika and start a conversation.

Transactional vs. Conversational

I've started sorting natural language products into two rough categories: Transactional and Conversational. Transactional AI is by far the most common right now. Here there is a well-defined goal that the model aims to complete for you, e.g. asking Alexa to set a timer.
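To make the "well-defined goal" concrete, here is a toy sketch that maps a timer request to a structured intent. It uses a hand-written regex rather than a trained model, and `SetTimer` and `parse_timer` are hypothetical names; this is not how Alexa actually works. The point is only that the output has a clear shape to solve for.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Conversion from spoken unit to seconds (hypothetical, minimal set of units).
UNIT_SECONDS = {"second": 1, "minute": 60, "hour": 3600}

# Matches phrases like "set a timer for 5 minutes" anywhere in the utterance.
TIMER_PATTERN = re.compile(r"set (?:a )?timer for (\d+) (second|minute|hour)s?", re.IGNORECASE)


@dataclass
class SetTimer:
    """The structured goal: a single duration in seconds."""
    seconds: int


def parse_timer(utterance: str) -> Optional[SetTimer]:
    """Map an utterance to a SetTimer intent, or None if it isn't a timer request."""
    match = TIMER_PATTERN.search(utterance)
    if match is None:
        return None
    amount, unit = int(match.group(1)), match.group(2).lower()
    return SetTimer(seconds=amount * UNIT_SECONDS[unit])


if __name__ == "__main__":
    print(parse_timer("Alexa, set a timer for 5 minutes"))
    print(parse_timer("How are you feeling today?"))
```

An open-ended message like "How are you feeling today?" comes back as `None`: there is no slot to fill, which is exactly the conversational case.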

Conversational AI, on the other hand, doesn't have a well-defined goal. You can usually tell when this is the case, because the interaction itself is the point rather than a means to accomplish some other action.

Replika is interesting to me because it's one of the few user-facing products I've seen getting traction on the conversational side of the spectrum. Don't get me wrong, the marketing for the product still has a transactional tilt (as most successful product marketing does). But the interaction itself is so free-form that it feels almost distinct from that message.

In the future, I'm confident we'll continue to see Transactional AI improve at a rapid pace. It's a much more straightforward problem to solve when you have a clearly defined Y to solve for. As for Conversational AI, it's a bit trickier. I'm still skeptical, but I'm a lot less skeptical than I used to be. It's coming at some point. It's just a matter of how soon.

Virtual Friends

When the day does come and Conversational AI hits mainstream adoption, some crazy shit is going to start happening. The quintessential use case that comes to mind for many is representing real people.

These could be people that are alive or have passed away. With messaging, social media, and eventually voice data, we have a corpus that is more than substantial enough to represent how a person interacts with the world around them.
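As a rough illustration of turning that corpus into something a conversational model can learn from, here is a minimal sketch that converts one person's message history into (context, reply) training pairs. It assumes a chat export shaped as a list of `(sender, text)` tuples; the format, the `build_pairs` function, and the `context_turns` window are hypothetical, not how Replika or Kuyda actually prepared their data.

```python
from typing import List, Tuple

# (sender, text): a hypothetical chat-export format.
Message = Tuple[str, str]


def build_pairs(history: List[Message], persona: str, context_turns: int = 3) -> List[Tuple[str, str]]:
    """Return (context, reply) pairs where the reply was written by `persona`.

    The context is the preceding `context_turns` messages formatted as
    "sender: text" lines, so a model learns how this person responds.
    """
    pairs = []
    for i, (sender, text) in enumerate(history):
        # Only keep the persona's messages that have something before them to respond to.
        if sender != persona or i == 0:
            continue
        window = history[max(0, i - context_turns):i]
        context = "\n".join(f"{s}: {t}" for s, t in window)
        pairs.append((context, text))
    return pairs


if __name__ == "__main__":
    chat = [
        ("alice", "hey"),
        ("bob", "hi there"),
        ("alice", "how was work?"),
        ("bob", "long day"),
    ]
    for context, reply in build_pairs(chat, persona="bob", context_turns=2):
        print(repr(context), "->", repr(reply))
```

Filtering out messages that feel too personal, as Kuyda did, would be one extra check before a pair is appended.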

You can use this power for personal therapy like the founder of Replika, but taking things a step further, you could publicize the output, effectively creating "virtual friends" that others engage with online.

Someone could pass away and still be "active" on the internet. The lines between living and dead start to blur aggressively. This is pretty out there, I know, but it could happen! What if the "verified" badge of the future signals authentic life rather than status?

It's worth noting that model output doesn't have to be tied to one person either. Virtual friends could be the aggregation of a corpus across thousands of people: an engineered online personality that you can follow and engage with.

This is all to say that the improvement of Conversational AI will continue to raise questions about our identity online. More and more of the internet will be created and curated by our virtual friends, and no matter what that means, I'm pretty sure the online world will feel a lot different than it does now.
